CS 180 Project 6: AR and Image Quilting

By Rhythm Seth

Overview

This project combines two exciting computer vision applications: augmented reality and texture synthesis. Part A focuses on placing virtual objects in real scenes through camera calibration and 3D projection, while Part B explores creating and manipulating textures using patch-based synthesis techniques.

Part A: AR Cube Project

This part implements an augmented reality system that projects a synthetic cube onto video frames using camera calibration and 3D-to-2D projection.

Point Correspondence and Tracking

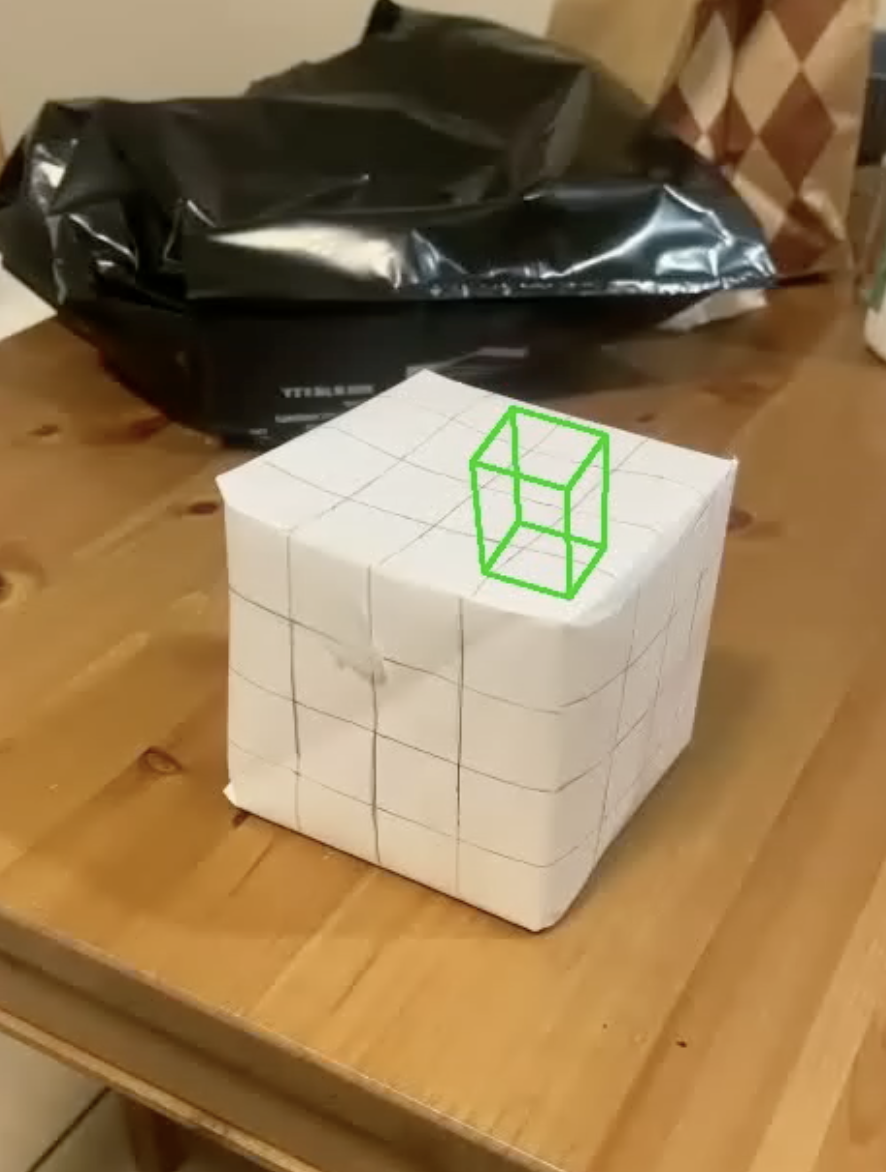

The AR implementation began with establishing point correspondences between 2D image coordinates and their corresponding 3D world coordinates. We carefully marked 20 points on the patterned box, ensuring proper ordering since each 2D image point needed to match its exact 3D world coordinate. The world coordinate system was centered at one corner of the box, with measurements taken to determine the precise 3D positions.

For tracking points across video frames, we used OpenCV's CSRT (Channel and Spatial Reliability Tracking) tracker. Each point was tracked by a separate tracker instance, initialized with an 8x8 pixel patch centered on the marked point. The CSRT tracker proved robust throughout the video sequence, following the points through changes in perspective and lighting.
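The per-point tracking loop can be sketched as below. This is a minimal illustration, not the project's code: the helper names (`point_to_bbox`, `bbox_center`, `track_points`) are hypothetical, and `cv2.TrackerCSRT_create` requires an OpenCV build that includes the contrib tracking module (e.g. the opencv-contrib-python package). The factory name has moved between OpenCV versions, so check yours. A tracker factory can be injected, which keeps the bookkeeping testable without OpenCV.

```python
import numpy as np

PATCH = 8  # side length of the square patch around each marked point (from the write-up)


def point_to_bbox(pt, size=PATCH):
    """Return an integer (x, y, w, h) box of side `size` centered on pixel (u, v)."""
    u, v = pt
    half = size / 2
    return (int(round(u - half)), int(round(v - half)), size, size)


def bbox_center(bbox):
    """Return the (u, v) center of an (x, y, w, h) box."""
    x, y, w, h = bbox
    return (x + w / 2, y + h / 2)


def track_points(frames, points, size=PATCH, make_tracker=None):
    """Track each point with its own tracker instance.

    Returns an array of shape (n_frames, n_points, 2); a point whose tracker
    reports failure on a frame is stored as NaN for that frame.
    """
    if make_tracker is None:
        import cv2  # assumption: a build with the contrib tracking module
        make_tracker = cv2.TrackerCSRT_create
    frames = iter(frames)
    first = next(frames)
    trackers = []
    for pt in points:
        tracker = make_tracker()
        tracker.init(first, point_to_bbox(pt, size))  # one tracker per point
        trackers.append(tracker)
    tracks = [np.asarray(points, dtype=float)]  # frame 0: the hand-marked points
    for frame in frames:
        current = []
        for tracker in trackers:
            ok, bbox = tracker.update(frame)
            current.append(bbox_center(bbox) if ok else (np.nan, np.nan))
        tracks.append(np.asarray(current, dtype=float))
    return np.stack(tracks)
```

With real video, `frames` would be the decoded BGR frames (for example read with `cv2.VideoCapture`), and `points` the 20 hand-marked pixel locations in the first frame.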

Camera Calibration and Projection

Camera calibration was achieved by computing the projection matrix using Singular Value Decomposition (SVD). The process involved constructing a system of linear equations using the corresponding 2D-3D point pairs. The projection matrix maps 4D homogeneous world coordinates to 3D homogeneous image coordinates, effectively encoding both the camera's intrinsic parameters and its pose relative to the world coordinate system.
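A minimal sketch of this calibration step, assuming the standard direct linear transform (DLT) formulation: each pair (X, Y, Z) ↔ (u, v) gives two linear equations in the 12 entries of the 3x4 projection matrix P, and the SVD solution is the right singular vector with the smallest singular value (defined only up to scale). The function names `fit_projection` and `project` are hypothetical.

```python
import numpy as np


def fit_projection(pts3d, pts2d):
    """Estimate a 3x4 matrix P with x ~ P [X, Y, Z, 1]^T from >= 6 correspondences.

    From u = p1.X / p3.X and v = p2.X / p3.X we get the homogeneous rows
    [X^T, 0, -u X^T] and [0, X^T, -v X^T]; stacking them gives A p = 0.
    """
    pts3d = np.asarray(pts3d, dtype=float)
    pts2d = np.asarray(pts2d, dtype=float)
    rows = []
    for (X, Y, Z), (u, v) in zip(pts3d, pts2d):
        Xh = [X, Y, Z, 1.0]
        rows.append(Xh + [0.0] * 4 + [-u * c for c in Xh])
        rows.append([0.0] * 4 + Xh + [-v * c for c in Xh])
    A = np.array(rows)
    _, _, Vt = np.linalg.svd(A)
    P = Vt[-1].reshape(3, 4)  # unit-norm least-squares solution of A p = 0
    return P / P[2, 3]        # fix the arbitrary scale (assumes P[2, 3] != 0)


def project(P, pts3d):
    """Project (N, 3) world points to (N, 2) pixel coordinates."""
    pts3d = np.asarray(pts3d, dtype=float)
    Xh = np.hstack([pts3d, np.ones((len(pts3d), 1))])  # homogeneous 4-vectors
    x = Xh @ P.T                                       # homogeneous 3-vectors
    return x[:, :2] / x[:, 2:3]
```

With real, noisy clicks, normalizing the 2D and 3D points before building `A` (Hartley normalization) usually improves conditioning; the sketch omits it for brevity.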

With the calibrated camera parameters, we rendered a virtual cube by projecting its vertices onto each frame. The cube's position and scale were adjusted relative to the world coordinate system centered on the box. Finally, the frames were combined into a video, demonstrating stable cube projection throughout the sequence despite camera movement.
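Rendering the cube can be sketched as: build its eight corners in world coordinates, project them with the per-frame matrix P, and connect corners that share an edge. This is an illustrative sketch; `cube_vertices`, `CUBE_EDGES`, and `cube_segments` are hypothetical names, and the cube's `origin` and `size` stand in for whatever placement was chosen relative to the box.

```python
from itertools import product

import numpy as np


def cube_vertices(origin=(0.0, 0.0, 0.0), size=1.0):
    """Return the 8 corners of an axis-aligned cube as an (8, 3) array."""
    o = np.asarray(origin, dtype=float)
    return np.array([o + size * np.array(c) for c in product((0, 1), repeat=3)])


# Two corners share an edge when their 0/1 offsets differ in exactly one axis.
CUBE_EDGES = [
    (i, j)
    for i, a in enumerate(product((0, 1), repeat=3))
    for j, b in enumerate(product((0, 1), repeat=3))
    if i < j and sum(x != y for x, y in zip(a, b)) == 1
]


def project(P, pts3d):
    """Project (N, 3) world points to (N, 2) pixel coordinates with a 3x4 matrix P."""
    pts3d = np.asarray(pts3d, dtype=float)
    Xh = np.hstack([pts3d, np.ones((len(pts3d), 1))])
    x = Xh @ P.T
    return x[:, :2] / x[:, 2:3]


def cube_segments(P, origin=(0.0, 0.0, 0.0), size=1.0):
    """Return the 12 cube edges as pairs of integer (u, v) pixel tuples."""
    pix = np.round(project(P, cube_vertices(origin, size))).astype(int).tolist()
    return [(tuple(pix[i]), tuple(pix[j])) for i, j in CUBE_EDGES]
```

Each returned pair can then be drawn onto the frame, for example with `cv2.line(frame, p, q, (0, 255, 0), 2)`, before the frames are written out as a video.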

Results

Part B: Image Quilting

This project implements the image quilting algorithm from the SIGGRAPH 2001 paper by Efros and Freeman. The goal is to perform texture synthesis (creating larger textures from small samples) and texture transfer (applying textures while preserving object shapes). Through increasingly sophisticated methods, we explore how to generate and manipulate textures effectively.

Random Sampling

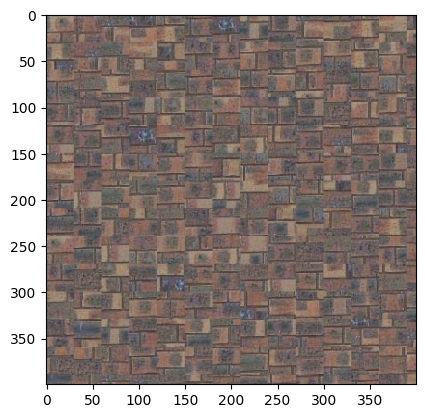

The simplest approach involves randomly sampling square patches from the input texture and placing them in a grid pattern. Using patch_size = 30 and output_size = (300,300), we created basic texture expansions. While straightforward, this method often produces visible seams and fails to maintain texture coherence.
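The random-sampling baseline amounts to a few lines of NumPy. The sketch below is illustrative; the function name `quilt_random` is hypothetical, and patches that would overrun the output border are simply cropped.

```python
import numpy as np


def quilt_random(sample, out_size, patch_size, rng=None):
    """Tile an output image with randomly chosen square patches from `sample`.

    sample: (H, W) or (H, W, C) array; out_size: (rows, cols) of the output.
    Patches are placed on a non-overlapping grid; border patches are cropped.
    """
    rng = np.random.default_rng() if rng is None else rng
    H, W = sample.shape[:2]
    out = np.zeros(tuple(out_size) + sample.shape[2:], dtype=sample.dtype)
    for i in range(0, out_size[0], patch_size):
        for j in range(0, out_size[1], patch_size):
            # Random top-left corner such that the full patch fits in the sample.
            y = rng.integers(0, H - patch_size + 1)
            x = rng.integers(0, W - patch_size + 1)
            patch = sample[y:y + patch_size, x:x + patch_size]
            h = min(patch_size, out_size[0] - i)
            w = min(patch_size, out_size[1] - j)
            out[i:i + h, j:j + w] = patch[:h, :w]
    return out
```

With patch_size = 30 and output_size = (300,300), this places a 10x10 grid of patches. Nothing relates neighboring patches to each other, which is why the seams are visible.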

Overlapping Patches

To improve coherence, we implemented patch sampling with overlapping regions. Each new patch is chosen based on its similarity to existing overlaps, measured using Sum of Squared Differences (SSD). Parameters: patch_size = 57, overlap_size = 5, tolerance = 3.

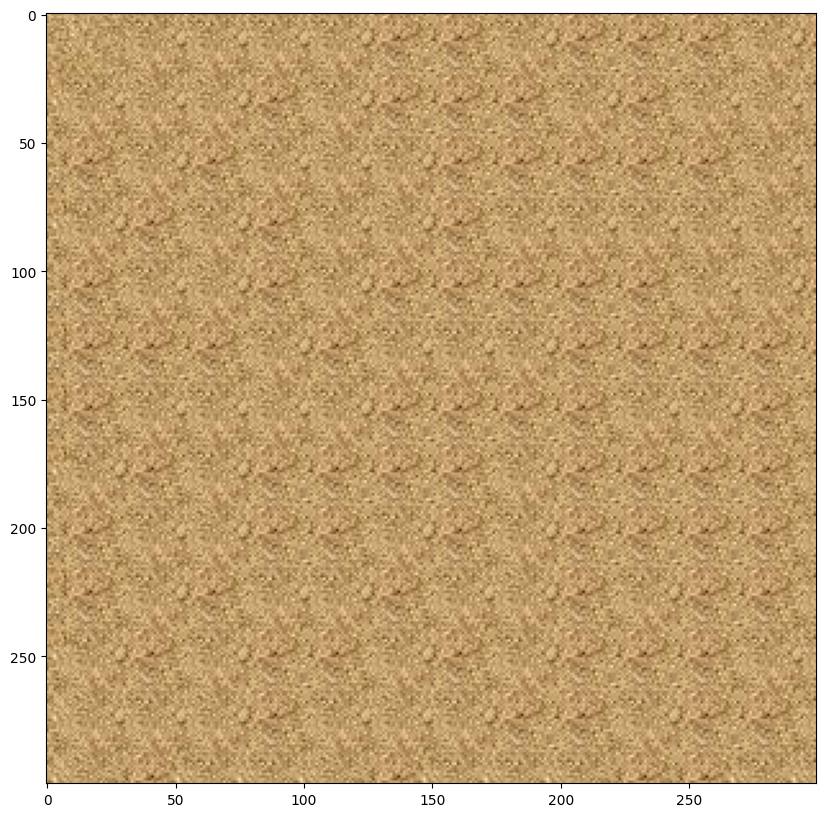
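The overlap-matching step can be sketched as follows: compute the masked SSD between the already-filled overlap and every candidate patch in the sample, then pick randomly among the lowest-cost candidates. Two assumptions are made here. First, `tolerance` is read as "choose among the `tol` lowest-cost patches", which is one common reading and not confirmed by the write-up. Second, the names `ssd_cost`, `choose_sample`, and `quilt_simple` are hypothetical. Inputs are (H, W, C) arrays; add a trailing axis to grayscale images.

```python
import math

import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def ssd_cost(sample, template, mask):
    """Masked SSD between `template` (p, p, C) and every p x p patch of `sample`.

    Uses sum(M*(S-T)^2) = sum(M*S^2) - 2*sum(M*S*T) + sum(M*T^2), so no
    per-patch difference array is materialized.
    """
    p = template.shape[0]
    win = sliding_window_view(sample, (p, p), axis=(0, 1))        # (h, w, C, p, p)
    win2 = sliding_window_view(sample ** 2, (p, p), axis=(0, 1))
    mt = (mask[..., None] * template).transpose(2, 0, 1)            # (C, p, p)
    cost = (np.einsum("ijcyx,yx->ij", win2, mask)
            - 2 * np.einsum("ijcyx,cyx->ij", win, mt)
            + np.sum(mask[..., None] * template ** 2))
    return np.maximum(cost, 0)  # clip tiny negative round-off


def choose_sample(cost, tol, rng):
    """Pick uniformly among the `tol` lowest-cost positions (assumed meaning of tolerance)."""
    best = np.argsort(cost, axis=None)[:max(1, tol)]
    return np.unravel_index(rng.choice(best), cost.shape)


def quilt_simple(sample, out_size, patch_size, overlap, tol, rng=None):
    """Fill the output left-to-right, top-to-bottom with SSD-matched overlapping patches."""
    rng = np.random.default_rng() if rng is None else rng
    sample = np.asarray(sample, dtype=float)
    p, step = patch_size, patch_size - overlap
    n_rows = max(1, math.ceil((out_size[0] - overlap) / step))
    n_cols = max(1, math.ceil((out_size[1] - overlap) / step))
    out = np.zeros((n_rows * step + overlap, n_cols * step + overlap, sample.shape[2]))
    for r in range(n_rows):
        for c in range(n_cols):
            i, j = r * step, c * step
            mask = np.zeros((p, p))
            if r > 0:
                mask[:overlap, :] = 1  # overlap with the patch above
            if c > 0:
                mask[:, :overlap] = 1  # overlap with the patch to the left
            if mask.any():
                cost = ssd_cost(sample, out[i:i + p, j:j + p], mask)
                y, x = choose_sample(cost, tol, rng)
            else:  # first patch has no constraint: pick it at random
                y = rng.integers(0, sample.shape[0] - p + 1)
                x = rng.integers(0, sample.shape[1] - p + 1)
            out[i:i + p, j:j + p] = sample[y:y + p, x:x + p]
    return out[:out_size[0], :out_size[1]]
```

The output canvas is grown to a whole number of patch steps and cropped at the end, so every requested pixel is covered by a full patch.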

Seam Finding

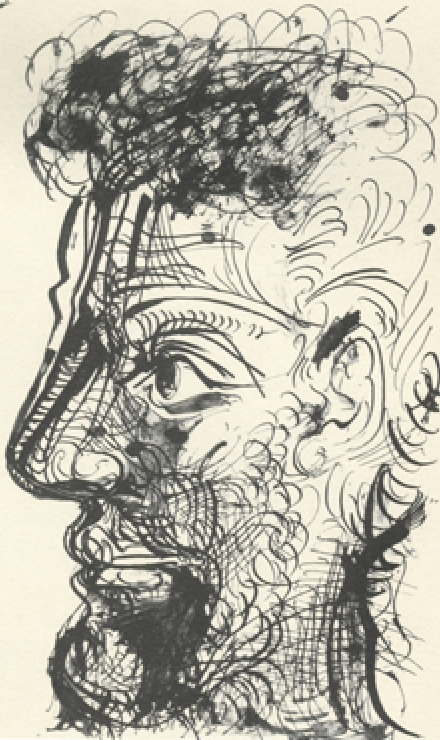

To eliminate visible edges between patches, we implemented a seam-finding algorithm that computes a minimum-cost path through each overlapping region and cuts the new patch along that path. We used the following parameters for the three textures:

- Texture 1: patch_size = 31, output_size = (300,300), overlap_size = 7, tolerance = 2

- Texture 2: patch_size = 71, output_size = (600,600), overlap_size = 20, tolerance = 5

- Texture 3: patch_size = 19, output_size = (300,300), overlap_size = 5, tolerance = 8

With these settings, the seams between patches are no longer visible in the synthesized textures.

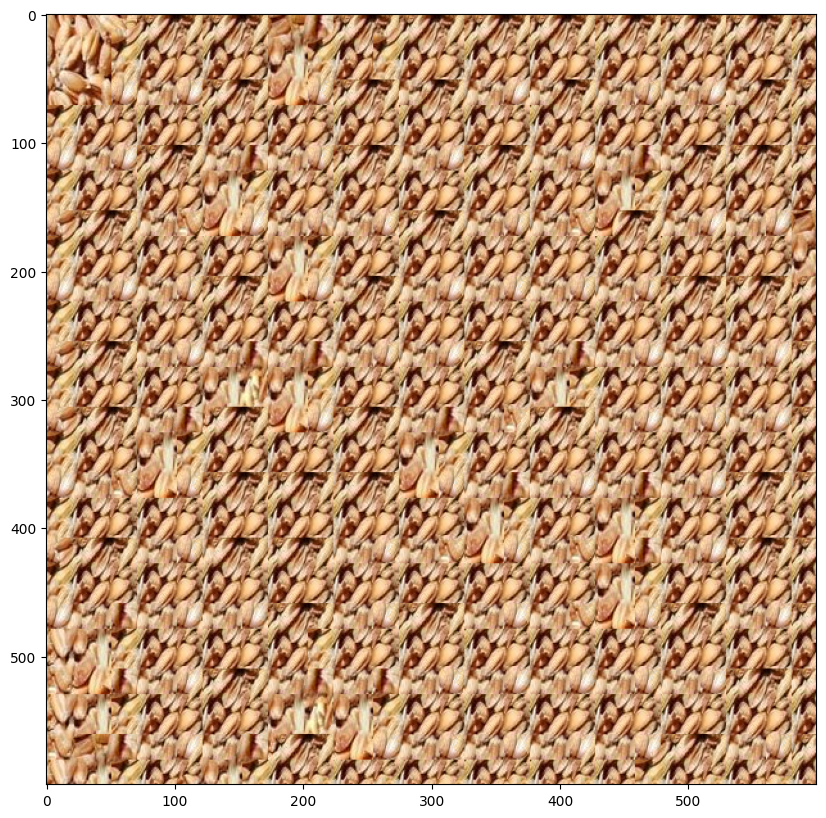

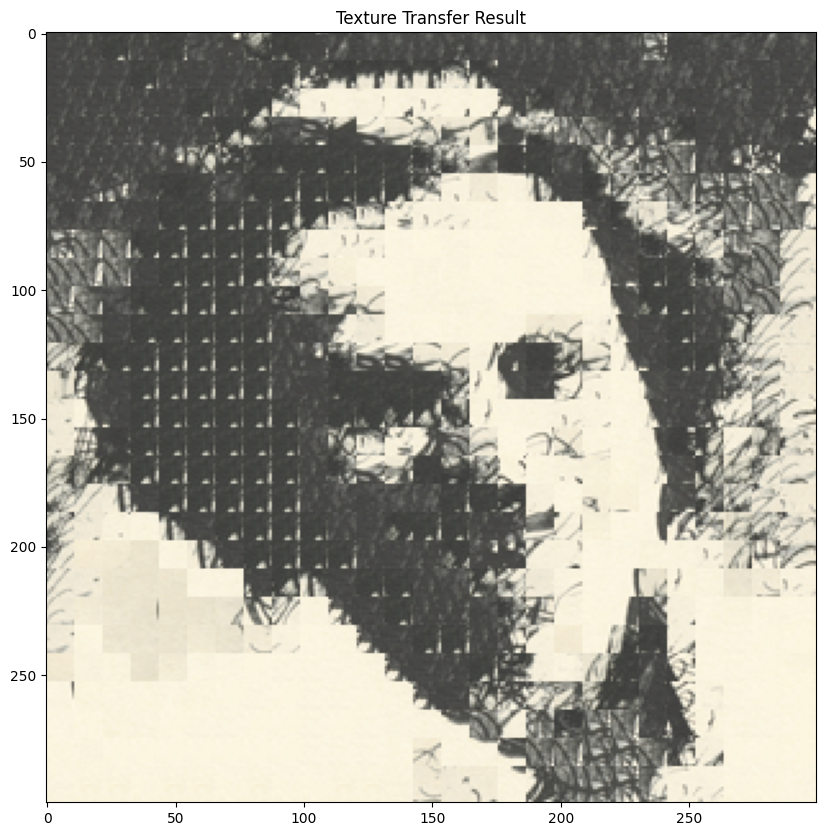
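The seam computation can be sketched with the dynamic program from the Efros and Freeman paper: accumulate the per-pixel overlap error row by row, taking the cheapest of the three neighbors above, then backtrack from the cheapest bottom cell. The horizontal (top) seam reuses the same routine on the transposed error. This is a sketch under those assumptions. The names `min_cut_path`, `seam_mask`, and `cut_mask` are hypothetical, and seam pixels are assigned to the new patch.

```python
import numpy as np


def min_cut_path(err):
    """Min-cost top-to-bottom path through an (h, w) error surface.

    E[i, j] = err[i, j] + min(E[i-1, j-1], E[i-1, j], E[i-1, j+1]);
    returns the chosen column index for every row.
    """
    h, w = err.shape
    E = err.astype(float).copy()
    for i in range(1, h):
        prev = np.pad(E[i - 1], 1, constant_values=np.inf)  # inf guards the borders
        E[i] += np.minimum(np.minimum(prev[:-2], prev[1:-1]), prev[2:])
    path = np.empty(h, dtype=int)
    path[-1] = int(np.argmin(E[-1]))
    for i in range(h - 2, -1, -1):  # backtrack through the three upper neighbors
        j = path[i + 1]
        lo, hi = max(j - 1, 0), min(j + 2, w)
        path[i] = lo + int(np.argmin(E[i, lo:hi]))
    return path


def seam_mask(err):
    """1 where the new patch is used (at or right of the seam), 0 where existing pixels stay."""
    h, w = err.shape
    path = min_cut_path(err)
    return (np.arange(w)[None, :] >= path[:, None]).astype(float)


def cut_mask(old, new, overlap, left=True, top=True):
    """Per-pixel mask (1 = take `new`) for a (p, p, C) patch with left and/or top overlaps."""
    p = new.shape[0]
    err = ((old - new) ** 2).sum(axis=-1)  # (p, p) per-pixel squared error
    mask = np.ones((p, p))
    if left:
        mask[:, :overlap] = seam_mask(err[:, :overlap])
    if top:
        top_mask = seam_mask(err[:overlap, :].T).T  # horizontal seam via transpose
        mask[:overlap, :] = np.minimum(mask[:overlap, :], top_mask)
    return mask
```

The patch is then composited as `mask[..., None] * new + (1 - mask[..., None]) * old`, where `old` is the current content of the output at the patch location.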

Texture Transfer

The final implementation renders a target image using the texture of a sample image, so the output takes on the sample's texture while following the target's structure. Texture transfer extends Quilt Cut (the seam-finding method above) by introducing SSD_transfer: the sum of squared differences between the target-image patch at the current output location and each candidate patch of the sample image. Using parameters patch_size = 13, overlap_size = 7, tolerance = 3, and alpha = 0.7, we transferred textures while preserving the target image's structure.
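In the Efros and Freeman paper, the two errors are combined as α times the overlap error plus (1 − α) times the correspondence error. The sketch below assumes the write-up's alpha plays that role, so alpha = 0.7 weights agreement with neighboring patches more than fidelity to the target. The names `masked_ssd` and `transfer_cost` are hypothetical, and the sample and target are assumed to share the same channels (e.g. both converted to luminance).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def masked_ssd(sample, template, mask):
    """sum(mask * (S - T)^2) for every p x p patch S of `sample`; arrays are (H, W, C)."""
    p = template.shape[0]
    win = sliding_window_view(sample, (p, p), axis=(0, 1))        # (h, w, C, p, p)
    win2 = sliding_window_view(sample ** 2, (p, p), axis=(0, 1))
    mt = (mask[..., None] * template).transpose(2, 0, 1)            # (C, p, p)
    cost = (np.einsum("ijcyx,yx->ij", win2, mask)
            - 2 * np.einsum("ijcyx,cyx->ij", win, mt)
            + np.sum(mask[..., None] * template ** 2))
    return np.maximum(cost, 0)  # clip tiny negative round-off


def transfer_cost(sample, out_patch, overlap_mask, target_patch, alpha):
    """alpha * SSD_overlap + (1 - alpha) * SSD_transfer for every candidate position.

    out_patch: current output content at this location (only the overlap counts);
    target_patch: the target image at the same location (compared in full).
    """
    ssd_overlap = masked_ssd(sample, out_patch, overlap_mask)
    ssd_transfer = masked_ssd(sample, target_patch, np.ones(overlap_mask.shape))
    return alpha * ssd_overlap + (1 - alpha) * ssd_transfer
```

This cost would replace the overlap-only SSD when choosing each patch. The seam cut and compositing stay as in Quilt Cut.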

Conclusion

This final project has provided comprehensive hands-on experience with two fundamental computer vision applications: augmented reality and texture synthesis. Through the AR implementation, I gained a practical understanding of camera calibration, point tracking, and 3D projection, all essential skills for creating mixed reality applications. The image quilting portion demonstrated the power of patch-based synthesis techniques and their applications in texture generation and transfer.

Key Learnings

AR Implementation

- Point correspondence and tracking using CSRT

- Camera calibration using SVD

- 3D-to-2D projection techniques

- Video processing and frame manipulation

Image Quilting

- Patch-based texture synthesis

- Seam finding algorithms

- Texture transfer techniques

- Image blending and composition

These implementations have not only enhanced my technical skills but also deepened my understanding of computer vision fundamentals, preparing me for future work in areas like mixed reality, computational photography, and image processing.